This article is Part 3 of a series about the development of Duotrigordle. Click here to read Part 1 and Part 2.

This article will focus on behind-the-scenes technical details about Duotrigordle, including the languages and technologies I used to build the website, as well as some lessons about web development that I learned from this experience.

The tech sandwich

Any modern website is built on layers of existing technology, and Duotrigordle is no exception.

For those curious, here are the technologies I chose to use to build my site:

Frontend

- TypeScript — Any web developer worth their salt is using TypeScript these days, and who wouldn't? Static typing is awesome.

- React — The most popular library for building websites, and also what I'm most familiar with.

- Redux — Although Redux is often criticized for being too complex and overly structured, I actually find it quite simple to use once you understand its underlying philosophy. Since Duotrigordle is a game, it leans more on global state compared to most websites, which fits naturally with Redux's pattern of a global store.

- CSS Modules — Duotrigordle isn't a particularly large website, so writing raw CSS works perfectly fine. The scoping that CSS Modules provides is very useful for preventing class name collisions.

- Jest — A popular testing framework that, like React, was created at Facebook (now Meta). I use Jest mostly to test the Redux store and game logic.
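To make the global-store idea concrete, here is a minimal sketch of a Redux-style reducer for typing and submitting guesses. It is plain TypeScript with no Redux import, and the state shape (`input`, `board`) and action names are hypothetical, loosely mirroring the `state.board` and `state.input` fields used later in this article rather than Duotrigordle's real store. Because a reducer is a pure function, testing it (with Jest or anything else) is just a matter of feeding in actions and checking the resulting state.

```typescript
// Hypothetical state shape for illustration only.
type Word = string[]; // one word = an array of letters
interface GameState {
  input: Word; // letters typed so far for the current guess
  board: Word[][]; // board[id] = list of guessed words on that board
}

type Action =
  | { type: "input/addLetter"; letter: string }
  | { type: "input/backspace" }
  | { type: "input/submit" };

const WORD_LENGTH = 5;

function initialState(boardCount: number): GameState {
  return { input: [], board: Array.from({ length: boardCount }, () => []) };
}

// A pure reducer: it returns a new state object and never mutates the old one.
// No dictionary check or solved-board handling; a real game needs both.
function gameReducer(state: GameState, action: Action): GameState {
  switch (action.type) {
    case "input/addLetter":
      if (state.input.length >= WORD_LENGTH) return state;
      return { ...state, input: [...state.input, action.letter] };
    case "input/backspace":
      return { ...state, input: state.input.slice(0, -1) };
    case "input/submit":
      if (state.input.length !== WORD_LENGTH) return state;
      // Append the guess to every board; previously guessed word arrays are reused as-is.
      return {
        input: [],
        board: state.board.map((words) => [...words, state.input]),
      };
    default:
      return state;
  }
}
```

In a real app this reducer would be handed to Redux (for example via Redux Toolkit's `configureStore`), and a test would dispatch a few actions and assert on `store.getState()`.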

Backend

- Rust — I've been a big proponent of Rust since 2021, so when it came time to write the backend for Duotrigordle I figured I should put my money where my mouth is and write it in Rust. It worked out great: the backend runs fast with a small memory footprint, and of course writing code in Rust is a delight.

- Axum — I think there are a few other popular Rust web frameworks, but Axum was created by the same team as the Tokio library, which probably means it will be well supported in the future. I ran into some typing issues, since the library makes heavy use of complicated traits and generic code, but after I figured those out it was mostly smooth sailing.

- PostgreSQL — I didn't know much about SQL databases and Postgres seemed popular so I went with it. Works great.

- Python — I use the pytest framework to write end-to-end tests for the backend. Although writing tests gets kind of tedious at times, they've helped me catch a bunch of bugs in my backend.

Infrastructure

- Docker — I use Docker to package my code, then on the server I use Docker Compose to orchestrate the containers that run the website. If you haven't used containerization before, I recommend giving it a try as it really does simplify the code build & deploy process.

- Caddy — Caddy is an HTTP server that automatically obtains and renews TLS certificates for HTTPS, which is great if your backend setup is simple.

- DigitalOcean — DigitalOcean is my cloud host of choice, though I haven't really tried any others, so I don't know how it compares to other providers. Duotrigordle runs on a single Droplet (DigitalOcean's name for a virtual machine).

Overall this is a relatively standard website tech stack, perhaps with the exception of Rust as a slightly less common backend choice. Duotrigordle is not a technically complex project, so a simple tech stack worked great for my needs.

Lessons learned

By working on Duotrigordle, I've learned many things about full-stack development on a production website. Below are a few of these lessons in no particular order:

Store account data local-first

This advice is mostly for websites where logging in with an account is optional.

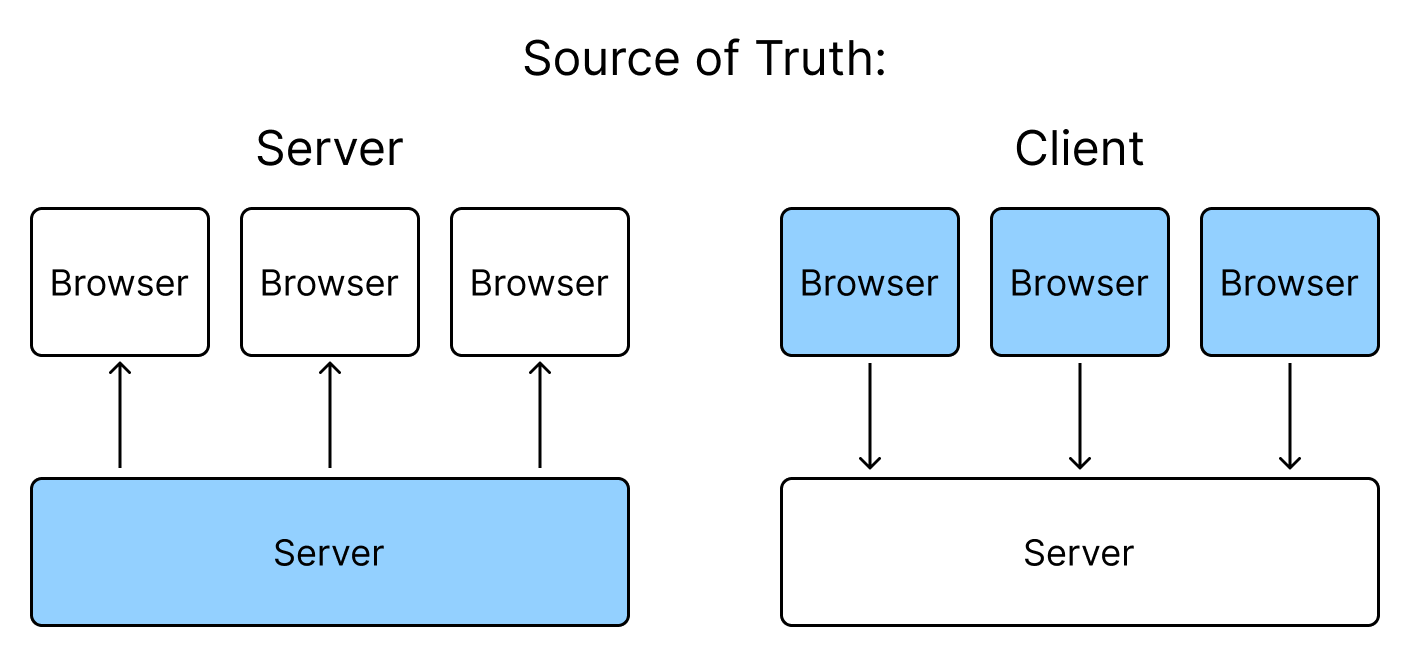

Most of the time when you have a website with user accounts and some kind of persistent data, it's tempting to store the data "server-side first", where you primarily store the user's data on the server and send updates to the server every time the data changes.

However, another approach is to store the data "client-side first" and treat the server as more of a backup of the user's data. Thus, each browser owns a slice of the full data, and the server contains a full aggregated view of all the data.

The main difference in these techniques is where the source of truth for your user's data lives. When the data on your server conflicts with the data in the browser, which one do you show to your user?

Making the browser's local data the source of truth has several advantages:

- This model works naturally for optional user accounts: since data is primarily stored in the browser anyways, there's no need to handle data differently between logged-in and logged-out users.

- It also makes offline mode easy: simply store all changes locally and send them to the server asynchronously once the connection returns.

- It makes logging out and migrating accounts easy: since the browser holds a full copy of its own data anyway, moving that data to a new account is straightforward.

- It partially mitigates server crashes and data loss, since your users always have a local copy of their data.
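A minimal sketch of the offline-friendly write path described above, assuming the browser's `localStorage` as the local store and a hypothetical `upload` callback that sends scores to the server. The `Score` shape, the class name, and the storage keys are made up for illustration. Every score is written locally first, so it is immediately visible to the user, and is also added to a pending queue that `sync()` tries to flush.

```typescript
// Hypothetical score record; `id` is assumed to be generated on the client
// (for example with crypto.randomUUID()) so local and server copies can be matched.
interface Score {
  id: string;
  guesses: number;
}

// Minimal subset of the Web Storage API; window.localStorage satisfies it.
interface KeyValueStore {
  getItem(key: string): string | null;
  setItem(key: string, value: string): void;
}

class LocalFirstScores {
  private storage: KeyValueStore;
  private upload: (scores: Score[]) => Promise<void>;

  constructor(storage: KeyValueStore, upload: (scores: Score[]) => Promise<void>) {
    this.storage = storage;
    this.upload = upload;
  }

  private read(key: string): Score[] {
    const raw = this.storage.getItem(key);
    return raw ? (JSON.parse(raw) as Score[]) : [];
  }

  private write(key: string, scores: Score[]): void {
    this.storage.setItem(key, JSON.stringify(scores));
  }

  // The local copy is written first and is the source of truth;
  // the score is also queued for upload to the server.
  add(score: Score): void {
    this.write("scores", [...this.read("scores"), score]);
    this.write("pendingSync", [...this.read("pendingSync"), score]);
  }

  all(): Score[] {
    return this.read("scores");
  }

  pendingCount(): number {
    return this.read("pendingSync").length;
  }

  // Tries to upload queued scores. If the request fails (e.g. offline),
  // they stay queued and the next call retries them.
  async sync(): Promise<boolean> {
    const pending = this.read("pendingSync");
    if (pending.length === 0) return true;
    try {
      await this.upload(pending);
    } catch {
      return false;
    }
    // Remove only what was uploaded; scores added during the request stay queued.
    const sent = new Set(pending.map((s) => s.id));
    this.write("pendingSync", this.read("pendingSync").filter((s) => !sent.has(s.id)));
    return true;
  }
}
```

In the browser this could be constructed as `new LocalFirstScores(window.localStorage, uploadScores)`, with `uploadScores` being your own API call, and `sync()` called on page load and from a `window.addEventListener("online", ...)` handler. Moving to a new account could work the same way: upload everything returned by `all()`.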

Duotrigordle uses a client-side first model, where each browser contains a full list of all game scores created from that device. When you log in, all the stats in that browser are synchronized to the server. Then when you go to look at your stats page, the data from the local browser is combined with the data from the server to form a full view of the user's stats (theoretically merging the data should be redundant since the server should contain the full client data, but this makes the system more resilient to data corruption in the backend).
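The merge step on the stats page could look something like the following sketch. It assumes each score carries a unique, client-generated `id` (a hypothetical field) so that a score present both locally and on the server is only counted once; on a conflict the local copy wins, matching the browser-as-source-of-truth model.

```typescript
// Hypothetical score record; each score carries a client-generated unique id.
interface Score {
  id: string;
  guesses: number;
}

// Combine the browser's scores with the server's copy into one view.
// Scores are keyed by id, so a score stored in both places is counted once;
// local entries are inserted last, so they overwrite server entries on conflict.
function mergeScores(local: Score[], server: Score[]): Score[] {
  const byId = new Map<string, Score>();
  for (const score of server) byId.set(score.id, score);
  for (const score of local) byId.set(score.id, score);
  return Array.from(byId.values());
}
```

Scores that only exist on the server (for example, ones played on another device) still show up, while a corrupted server copy of a local score is masked by the browser's version.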

Note that this model works best when it's possible for each client to separately hold a slice of the full data (e.g. a subset of all the user's scores). If it is important for each client to have an up-to-date view of all the data, it may be better to consider a server-side first approach.

Minimize rerenders in React

Although it may not seem like it, rendering the Duotrigordle game is surprisingly computationally complex. Each board has up to 185 letters (37 rows of 5 letters), and there are 32 boards, for a total of up to 5920 letters rendered at a time.

On its own, rendering 5920 components isn't infeasible for React, but if done naively it can lead to slow gameplay, in particular when the user is typing. For comparison, try Sexagintaquattuordle, which (as of writing) is a fair bit less optimized and gives sluggish feedback when typing.

When writing the board component, the first approach I tried looked something like this:

```jsx
// useAppSelector is the app's typed wrapper around react-redux's useSelector
// (this import path is hypothetical).
import { useAppSelector } from "./hooks";

function Board({ id }) {
  // Re-renders whenever this board's words OR the shared input change
  const words = useAppSelector((state) => state.board[id]);
  const input = useAppSelector((state) => state.input);
  return (
    <div>
      {words.map((word, i) => (
        <Word key={i} word={word} />
      ))}
      <Word word={input} />
    </div>
  );
}

function Word({ word }) {
  return (
    <div>
      {word.map((letter, i) => (
        <span key={i}>{letter}</span>
      ))}
    </div>
  );
}
```

This works fine, but has one problem: every time the user types, the entire `<Board/>` component has to re-render, which involves rendering all the words… even though we know that only the input is changing!

This led to relatively sluggish gameplay, since up to 5920 components would need to be re-rendered on every keystroke.

The solution is to extract the input row into its own component. That way, when the user types, only the input component needs to re-render, reducing the number of re-rendered letters from 5920 to just 160 (5 input letters on each of the 32 boards).
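The figures above multiply out as follows. The 37 rows per board isn't spelled out in this paragraph; it is what 5920 / (32 × 5) works out to, and it matches Duotrigordle's 37-guess limit.

```typescript
const BOARDS = 32;
const ROWS_PER_BOARD = 37;
const WORD_LENGTH = 5;

// Naive version: every keystroke re-renders every letter on every board.
const naiveLettersPerKeystroke = BOARDS * ROWS_PER_BOARD * WORD_LENGTH;
// Split version: only each board's input row re-renders.
const splitLettersPerKeystroke = BOARDS * WORD_LENGTH;

console.log(naiveLettersPerKeystroke); // 5920
console.log(splitLettersPerKeystroke); // 160
console.log(naiveLettersPerKeystroke / splitLettersPerKeystroke); // 37
```

In other words, moving the input into its own component cuts the per-keystroke work by a factor equal to the number of rows on a board.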

In addition, a little bit of `React.memo` prevents child components from re-rendering if their props don't change. This is useful when you guess a new word: only the added word needs to be re-rendered, as all the previously rendered words are memoized and don't need to be rendered again.

```jsx
import React from "react";
// Hypothetical path to the app's typed wrapper around react-redux's useSelector
import { useAppSelector } from "./hooks";

function Board({ id }) {
  // No longer subscribes to the input, so typing doesn't re-render the board
  const words = useAppSelector((state) => state.board[id]);
  return (
    <div>
      {words.map((word, i) => (
        <Word key={i} word={word} />
      ))}
      <Input />
    </div>
  );
}

// Only this component re-renders when the user types!
function Input() {
  const input = useAppSelector((state) => state.input);
  return <Word word={input} />;
}

// This component only re-renders when its props change
const Word = React.memo(function Word({ word }) {
  return (
    <div>
      {word.map((letter, i) => (
        <span key={i}>{letter}</span>
      ))}
    </div>
  );
});
```

The lesson is that if you understand the React rendering model, you can structure your component tree to minimize re-renders, which in some cases can vastly improve your website's performance.
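Why does `React.memo` skip the old words? By default it compares each prop with its previous value using `Object.is`, a shallow check: the same array object counts as equal, while an equal-looking new array does not. The simplified sketch below reimplements that comparison for illustration; it is not React's actual source, and `shallowEqualProps` is a hypothetical name. It also shows why the trick depends on Redux's immutable updates: previously guessed word arrays keep their identity across updates, whereas the new guess arrives as a new array.

```typescript
type Props = Record<string, unknown>;

// Simplified version of the default comparison React.memo performs:
// same set of keys, and each value identical according to Object.is.
function shallowEqualProps(prev: Props, next: Props): boolean {
  const prevKeys = Object.keys(prev);
  const nextKeys = Object.keys(next);
  if (prevKeys.length !== nextKeys.length) return false;
  return prevKeys.every(
    (key) => Object.prototype.hasOwnProperty.call(next, key) && Object.is(prev[key], next[key]),
  );
}

const oldWord = ["C", "R", "A", "N", "E"];
// Same array object: props are equal, so a memoized <Word/> skips rendering.
shallowEqualProps({ word: oldWord }, { word: oldWord }); // true
// Same letters but a new array: not equal, so <Word/> re-renders.
shallowEqualProps({ word: oldWord }, { word: [...oldWord] }); // false
```

If the default check isn't enough, `React.memo` also accepts a custom comparison function as its second argument.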

Write (integration) tests

This is something every developer should already know, but I found writing tests to be a valuable use of time, in particular for the backend.

I find backend tests to be a little more important than frontend tests, since bugs in your backend code often lead to more catastrophic outcomes: frontend bugs shouldn't lead to data corruption or loss in your database, unless you're doing something horribly wrong.

Alongside my Rust backend code I maintain a fairly large test suite written in Python that tests each endpoint of my HTTP API. Since I use Docker, I can spin up an entire instance of my backend (including the database) with a single Docker Compose configuration.

On multiple occasions, these tests have caught major bugs that would've been a headache to resolve if they were shipped to production.

Back up your databases

If you're running any kind of production software with a database, MAKE SURE to set up a backup system for your database!

If your project isn't very large, all you need is a spare machine with some storage and a script that periodically downloads a copy of your database. If you're using Postgres like I am, pg_dump is your friend.

Perhaps just as importantly, make sure you're able to actually recover your database from the backup: every now and then, try loading your backup into a staging or development environment to make sure that your backups work correctly. Don't be like GitLab, who lost 6 hours of production data due to a faulty backup system.
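A minimal sketch of such a backup script, written as a Node.js script in TypeScript that shells out to `pg_dump`. It assumes `pg_dump` is on the `PATH` of the backup machine and that connection details come from the standard libpq environment variables (`PGHOST`, `PGUSER`, `PGPASSWORD`) or a `~/.pgpass` file. The function names, file naming scheme, and retention policy are illustrative assumptions, not Duotrigordle's actual setup.

```typescript
import { execFileSync } from "node:child_process";
import { readdirSync, unlinkSync } from "node:fs";
import { join } from "node:path";

// Timestamped name such as "duotrigordle-2024-01-31T12-00-00Z.dump";
// colons are replaced so the name is valid on every filesystem.
function backupFileName(dbName: string, now: Date): string {
  const stamp = now.toISOString().replace(/\.\d{3}Z$/, "Z").replace(/:/g, "-");
  return `${dbName}-${stamp}.dump`;
}

// pg_dump arguments: the custom format is compressed by default and is
// restored with pg_restore. Connection details come from libpq env vars.
function pgDumpArgs(dbName: string, outFile: string): string[] {
  return ["--format=custom", `--file=${outFile}`, dbName];
}

// Which old backups to delete so that only the newest `keep` remain.
// ISO timestamps sort lexicographically, so sorting names sorts by age.
function backupsToDelete(fileNames: string[], keep: number): string[] {
  const sorted = [...fileNames].sort();
  return sorted.slice(0, Math.max(0, sorted.length - keep));
}

// Run one backup, then prune old ones. Meant to be scheduled, e.g. from cron.
export function runBackup(dbName: string, dir: string, keep: number): void {
  const file = join(dir, backupFileName(dbName, new Date()));
  // execFileSync throws if pg_dump exits non-zero, so a failed dump
  // never triggers pruning of older, known-good backups.
  execFileSync("pg_dump", pgDumpArgs(dbName, file), { stdio: "inherit" });
  const existing = readdirSync(dir).filter((f) => f.startsWith(`${dbName}-`) && f.endsWith(".dump"));
  for (const old of backupsToDelete(existing, keep)) unlinkSync(join(dir, old));
}
```

Run hourly with, say, `keep = 48` (and a hypothetical database name and backup directory), this keeps roughly two days of dumps. To check that a dump is actually usable, restore it into a throwaway database with `pg_restore --dbname=<staging database> <file>`.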

On more than one occasion, I've wiped all the data from my production machine by accident (see the section below). Luckily, because of my backup system, I was able to recover the vast majority of the data. I've likely lost a couple of minutes of production data this way; if your streak broke because of this, I'm very sorry.

Don't run a production database inside Docker

I'm not sure if this is a common occurrence, but I've had multiple instances where my Postgres Docker volume got corrupted after running `apt upgrade`, even though the container wasn't running.

Due to trust issues, I'm now running Postgres directly on my machine without containerization. I still run my applications inside containers; they access Postgres through the Docker host gateway.

Click here to read Part 1 about the history of features added to the game, or Part 2 about adding advertising to Duotrigordle.